Behave-Django Monkey Patches

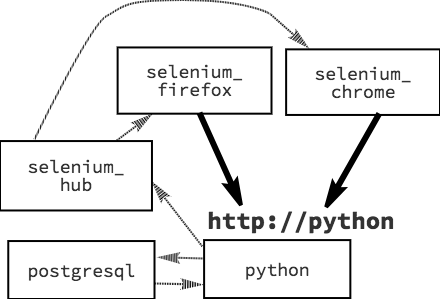

Fri, Aug 10, 2018

I’m building a Django dev environment in Docker, and I want to be able to write Gherkin feature scripts to drive Selenium testing with Chrome and Firefox. I’m using Docker Compose to orchestrate containers named `python`, `postgresql`, `selenium_hub`, `selenium_chrome`, and `selenium_firefox`. In particular, I’m using the `selenium/node-chrome-debug` and `selenium/node-firefox-debug` images so that I can open the containers with a VNC client where I can watch the browsers run and even interact with them when I need to troubleshoot tests. A third-party Django module called behave-django smooths the way for integrating the Gherkin tests with Django, but I ran into some difficulties while setting it up that I have since resolved, at least provisionally, with monkey patches.

localhost refuses to connect

The first problem has to do with networking between containers. Behave-django’s `BehaviorDrivenTestCase` class inherits from Django’s `StaticLiveServerTestCase`, and when you launch the tests, several things happen: Django creates a new, empty version of your application’s database, and it serves a parallel instance of the application over a random free port on `localhost`. The `python` container engages the `selenium_hub` container, which then delegates the testing to `selenium_chrome` or `selenium_firefox`. Those web-drivers in turn need to be able to reach the live Django test application. By default, Django tells them to look on `localhost`, but since the application and the drivers are in different containers, `localhost` is not going to work: inside a browser container it refers to that container itself. The drivers need to know the name of the application container. In other words, we need a way to designate `python` rather than `localhost` in the URL that Django uses to share its location with the `selenium_hub`.
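
To see why, it helps to picture how the advertised URL is put together. The following is a minimal stand-in sketch, not Django's actual code: the class name `LiveServerStandIn` and the port number are made up for illustration. The only thing carried over from Django is the idea that the URL is built from a `host` attribute on the test case class plus whatever port the server was given.

```python
class LiveServerStandIn:
    """Hypothetical stand-in for a live-server test case (not Django code)."""

    host = "localhost"  # hostname advertised to the browsers by default
    port = 43521        # made-up example of a randomly assigned free port

    @classmethod
    def live_server_url(cls):
        # The advertised URL is just the host attribute plus the port.
        return "http://%s:%s" % (cls.host, cls.port)


# This is the address the browser container would be told to open.
# Inside selenium_chrome or selenium_firefox, "localhost" is that
# container itself, where no Django server is listening.
url_seen_by_browser = LiveServerStandIn.live_server_url()
print(url_seen_by_browser)
```

If the `host` attribute held the container name instead, the same expression would yield an address that the browser containers can resolve.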

It would be nice if we could simply add a setting to our `behave.ini` or use a command-line option when launching the tests, but I could not find a way to do either. Alternatively, if we were instantiating `BehaviorDrivenTestCase` in our own code, then we could modify the instance as soon as we created it. For good or ill, that all happens behind the scenes; it’s part of the behave-django module itself, and so it’s not in code that we control.

Fortunately, we’re not out of options. Python lets us modify classes at runtime, so in our `features/environment.py` file we can import the `BehaviorDrivenTestCase` class and modify it in the `before_all` function that runs at the very beginning of each test session. When we change a class attribute this way, it alters how our instance behaves, even though we are not accessing the instance and even if the instance already exists. Without modifying the source, we get to rewrite the class on the fly. What’s more, the change propagates to every object created from the class, as long as that object has not set its own value for the attribute.

This, then, is the “monkey patch”:
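
A minimal sketch of such a patch, assuming that the class can be imported from `behave_django.testcase` and that the hostname the browsers should use is `python`, the name of the application container in the Compose setup described above. The `host` attribute is the one Django's live server test case uses when it builds its URL.

```python
# features/environment.py


def before_all(context):
    # Imported inside the hook so that nothing from behave-django is
    # loaded until the test session actually starts.
    from behave_django.testcase import BehaviorDrivenTestCase

    # Assumption: "python" is the Compose service name of the Django
    # container, so the Selenium nodes can resolve it on the Docker network.
    BehaviorDrivenTestCase.host = "python"
```

With a different service name in `docker-compose.yml`, the string would need to change to match.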


Coming as I do from a PHP background, I still find it surprising that this works, but we can confirm it for ourselves in an interactive Django shell.


After we modify the class, we can see that the instance has also changed.
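
The same behaviour can be reproduced without Django at all. The sketch below uses a hypothetical stand-in class, `FakeTestCase`, in place of `BehaviorDrivenTestCase`; it illustrates ordinary Python attribute lookup rather than anything specific to behave-django.

```python
class FakeTestCase:
    """Hypothetical stand-in for BehaviorDrivenTestCase."""

    host = "localhost"


existing = FakeTestCase()       # created before the patch
host_before = existing.host     # looked up on the class

FakeTestCase.host = "python"    # patch the class, not the instance

host_after = existing.host      # the same object now sees the new value

# An instance that has set its own value shadows the class attribute
# and is not affected by later changes to the class.
shadowed = FakeTestCase()
shadowed.host = "example"
FakeTestCase.host = "somewhere-else"
host_shadowed = shadowed.host

print(host_before, host_after, host_shadowed)
```

The instance never stored a `host` of its own, so each lookup falls through to the class. That is why a patch applied after the object was created still takes effect, and why an instance with its own value is left alone.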

Of course, modifying classes this way could have all kinds of unintended and undesirable consequences. The weaknesses of monkey-patching include:

- We are relying on internals of the class we are patching, internals that could easily change as part of an upgrade.

- We’re going to affect all running objects that are derived from the class we are modifying, so we might break something.

- We might make it difficult to debug our code. The changes we are making could be mysterious to someone who doesn’t know about our patch.

Even so, I feel that the use here is relatively unproblematic.

- `BehaviorDrivenTestCase` is a highly specific class that is meant only for testing, and we are the only ones who should be doing any of that.

- We’re putting our patch right in the place where we are putting several other more ‘legitimate’ settings related directly to our testing.

- Our patch will only ever run as part of a test. If we do break something, it will be our own testing.

reset_sequences

django.db.utils.IntegrityError: duplicate key value violates unique constraint "users_user_pkey"

DETAIL: Key (id)=(2) already exists.

Setting the host to `python` solved my connection problem, but other woes were just around the corner. After I had written a few tests I started to encounter an odd error with the text above at the end of a long stack trace. By pausing the test during execution and examining the database I was able to find that in some cases the first user created during a test case would have `id=2` rather than `id=1`. That wouldn’t have mattered except that the second user then also wanted `id=2`. After a bit of searching I found that I might need to set `reset_sequences` to `True` in my test class. Adding the following line to my `environment.py` file did the trick:
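
A minimal sketch of that line in context, again assuming the `behave_django.testcase` import path and placing it in the same `before_all` hook as the host patch. `reset_sequences` is a standard attribute on Django's transactional test cases that asks for database sequences to be reset before each test runs.

```python
# features/environment.py


def before_all(context):
    # Imported inside the hook so that nothing from behave-django is
    # loaded until the test session actually starts.
    from behave_django.testcase import BehaviorDrivenTestCase

    # Reset primary-key sequences before each test so that the first
    # row inserted in every test starts from a fresh sequence.
    BehaviorDrivenTestCase.reset_sequences = True
```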


serialized_rollback

django.db.utils.IntegrityError: insert or update on table "users_user" violates foreign key constraint "users_user_auth_id_f64b924a_fk_users_authmethod_slug"

DETAIL: Key (auth_id)=(local) is not present in table "users_authmethod".

A third problem arose only because I was using data migrations to pre-populate some tables. I have a custom user model with fields like `institution` and `authmethod`. The possible values are known in advance, so I have manually created some data migrations to populate the corresponding tables. In the user table a foreign key then references the appropriate table.

For the first test that runs everything is fine, but then the test runner completely clears the database, including those pre-populated tables. If you then try to create a user whose default value for one of those fields points at a now-empty table, you’ll violate the foreign key constraint. To fix this we need to set `serialized_rollback` to `True`. That gives us the following:
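
A sketch of the complete hook with all three patches together, under the same assumptions as before: the import path `behave_django.testcase` and the container name `python`. `serialized_rollback` is the standard Django test case option that reloads the data created by migrations before each test.

```python
# features/environment.py


def before_all(context):
    # Imported inside the hook so that nothing from behave-django is
    # loaded until the test session actually starts.
    from behave_django.testcase import BehaviorDrivenTestCase

    # Advertise the Django container's name instead of localhost
    # (assumes the Compose service is called "python").
    BehaviorDrivenTestCase.host = "python"

    # Reset primary-key sequences before each test.
    BehaviorDrivenTestCase.reset_sequences = True

    # Restore data inserted by migrations after the database is flushed.
    BehaviorDrivenTestCase.serialized_rollback = True
```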


And with that, everything finally seems to be working.

CI

It took some time, but the payoff has been high, not least because of a nice little windfall: using the same Docker stack, it has proven relatively easy to set up automated CI testing in a GitLab environment.